Hi! I am a second-year EECS PhD student at UC Berkeley working with Sergey Levine. I previously completed my undergraduate degree in Engineering Science (Robotics) at the University of Toronto, advised by Tim Barfoot. My research focuses on generalist robot navigation and interfacing with robots through natural language. I am also interested in mobile manipulation and in pushing the boundaries of how foundation models and non-robot data can improve robot learning.

I will be interning at Physical Intelligence in summer 2025.

Publications

Here is a list of my publications to date. Please feel free to reach out with questions!

-

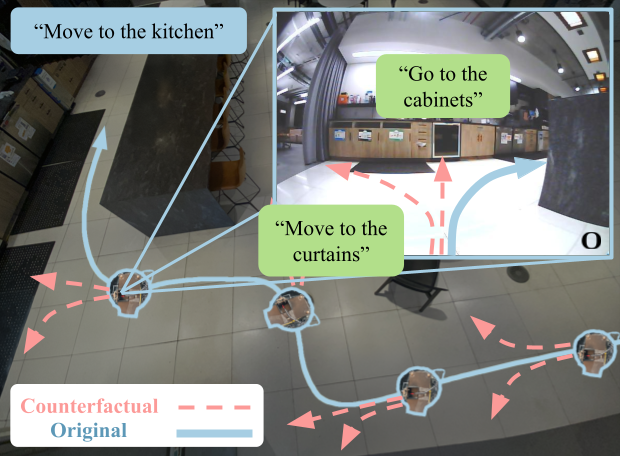

CAST: Counterfactual Labels Improve Instruction Following in Vision-Language-Action Models

arXiv preprint

TLDR: We introduce CAST, a method for augmenting robotics datasets with counterfactual trajectories to improve language following in vision-language-action models.

@misc{glossop2025castcounterfactuallabelsimprove,
  title={CAST: Counterfactual Labels Improve Instruction Following in Vision-Language-Action Models},
  author={Catherine Glossop and William Chen and Arjun Bhorkar and Dhruv Shah and Sergey Levine},
  year={2025},
  eprint={2508.13446},
  archivePrefix={arXiv},
  primaryClass={cs.RO},
  url={https://arxiv.org/abs/2508.13446},
}

-

Learning to Drive Anywhere with Model-Based Reannotation

arXiv preprint

-

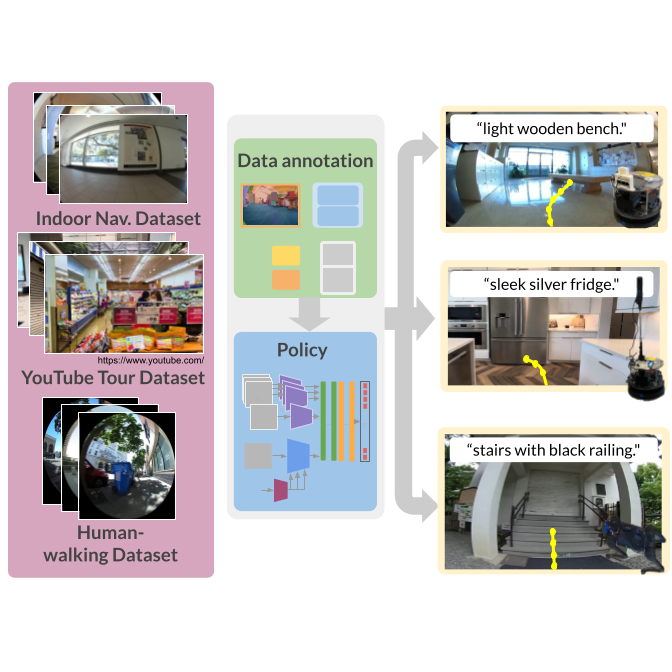

LeLaN: Learning A Language-Conditioned Navigation Policy from In-the-Wild Videos

CoRL 2024

TLDR: We annotated 100s of hours of in-the-wild video using a self-supervised pipeline to train a robust language-conditioned last-mile navigation policy.

@inproceedings{hirose24lelan,
  title={LeLaN: Learning A Language-Conditioned Navigation Policy from In-the-Wild Video},
  author={Noriaki Hirose and Catherine Glossop and Ajay Sridhar and Dhruv Shah and Oier Mees and Sergey Levine},
  booktitle={Conference on Robot Learning},
  year={2024}
}

-

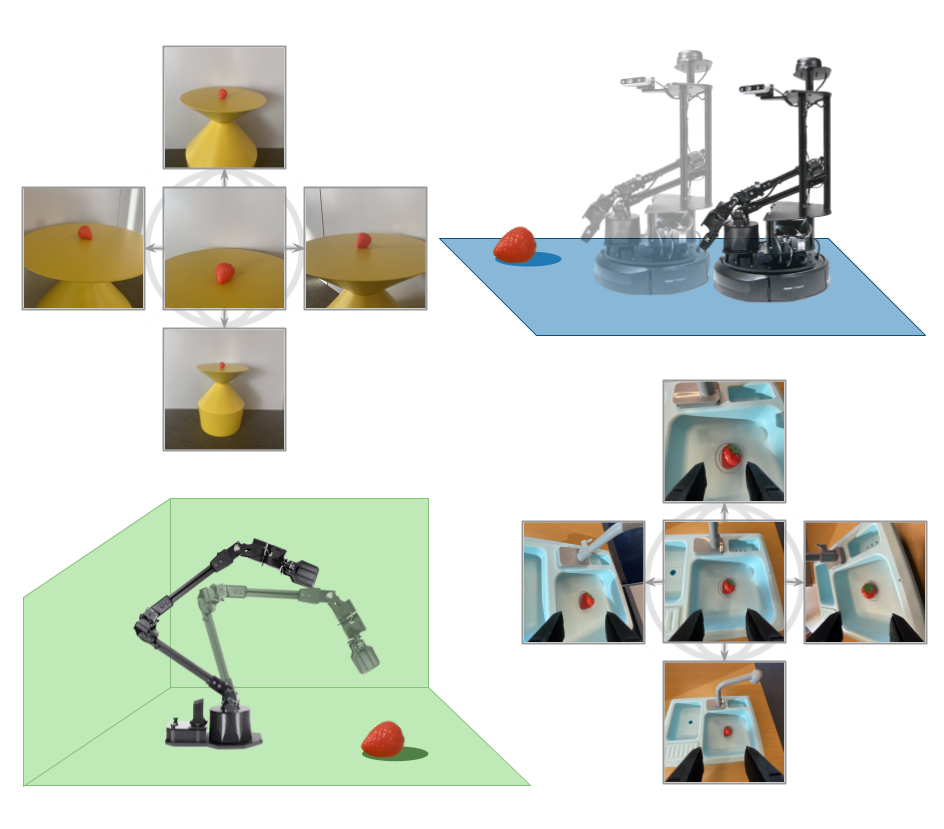

Pushing the Limits of Cross-Embodiment Learning for Manipulation and Navigation

RSS 2024

TLDR: We train a single policy that can perform both navigation and manipulation tasks on data from vastly different embodiments (including wheeled robots, quadrupeds, various manipulators, and self-driving vehicles).

@article{yang2024pushing,
  title={Pushing the Limits of Cross-Embodiment Learning for Manipulation and Navigation},
  author={Yang, Jonathan and Glossop, Catherine and Bhorkar, Arjun and Shah, Dhruv and Vuong, Quan and Finn, Chelsea and Sadigh, Dorsa and Levine, Sergey},
  journal={arXiv e-prints},
  pages={arXiv--2402},
  year={2024}
}

-

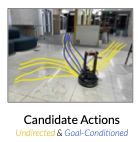

NoMaD: Goal Masked Diffusion Policies for Navigation and Exploration

ICRA 2024 (Best Paper Award)

TLDR: We train a single diffusion policy on robot navigation data across different embodiments and environments that can perform both goal-oriented and goal-agnostic navigation.

@article{sridhar2023nomad,
  author = {Ajay Sridhar and Dhruv Shah and Catherine Glossop and Sergey Levine},
  title = {{NoMaD: Goal Masked Diffusion Policies for Navigation and Exploration}},
  journal = {arXiv pre-print},
  year = {2023},
  url = {https://arxiv.org/abs/2310.07896}
}

-

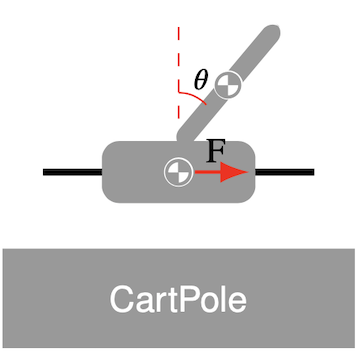

Characterising the Robustness of Reinforcement Learning for Continuous Control using Disturbance Injection

NeurIPS 2022 TEA and DistShift Workshops

TLDR: We empirically evaluate the robustness of different reinforcement learning algorithms to action, observation, and environmental disturbances.

@misc{glossop2022characterisingrobustnessreinforcementlearning,
  title={Characterising the Robustness of Reinforcement Learning for Continuous Control using Disturbance Injection},
  author={Catherine R. Glossop and Jacopo Panerati and Amrit Krishnan and Zhaocong Yuan and Angela P. Schoellig},
  year={2022},
  eprint={2210.15199},
  archivePrefix={arXiv},
  primaryClass={cs.RO},
  url={https://arxiv.org/abs/2210.15199},
}